The Engineering Path for AI Agents Series (Part 1): Is the AI Agent Dead? Long Live the “Micro Agent”?

The AI Agent wave made an enticing promise: give the AI a goal and it will plan autonomously, call tools, and finish the job, like a tireless super intern. It seemed we could finally throw away tedious flowcharts and hand-written code and enter a new era of “self-growing software.”

But if you have built or heavily used such Agents yourself, you will have discovered a cruel truth: once a conversation exceeds 10 to 20 turns, they “lose their way,” repeatedly calling tools that have already failed and getting trapped in endless loops. In the end, the highly anticipated “super intern” turns into an expensive, unreliable, frustrating “digital pet.”

What went wrong? Is the model not smart enough? Not at all. The problem is our architectural paradigm. In this opening piece of “The Engineering Path for AI Agents” series, my tech partner Tam takes you back to software’s first principles and argues that today’s popular “General Agents” are the wrong path, and that the future belongs to smaller, more focused “Micro Agents.”

(This series is written by my tech partner Tam. He is introverted and camera-shy, so I am publishing it for him.)

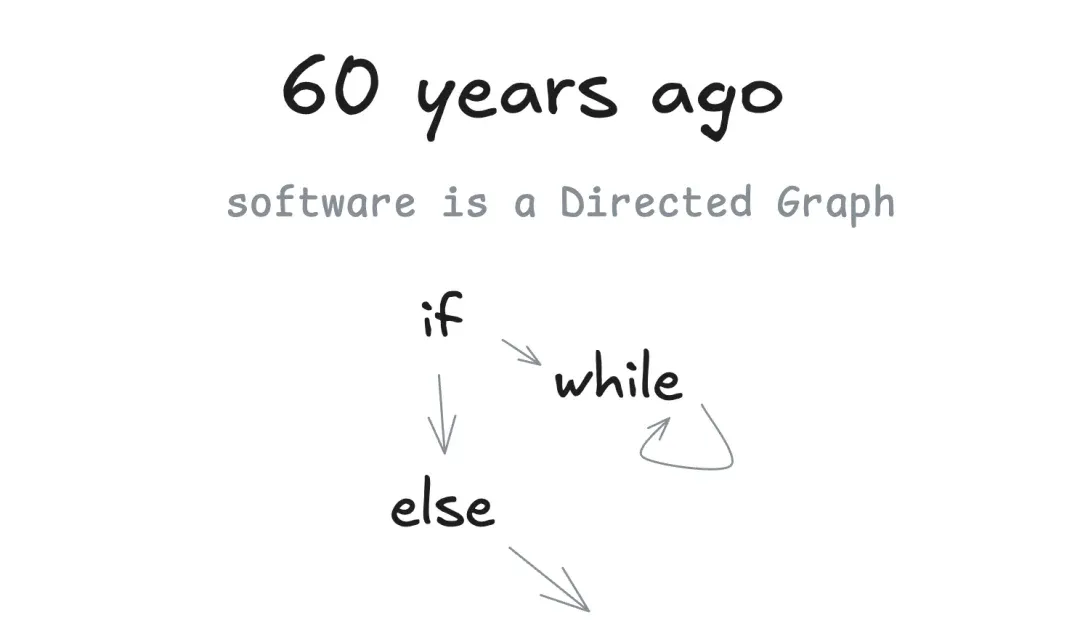

Chapter 1: Software’s Essence—A History Written in “Graphs”

To understand where Agents are headed, we first need to review software’s past. What is software at its core? From an engineering perspective, a program’s execution is a directed graph. We first represented it with flowcharts, which spell out every operation and every transition condition.

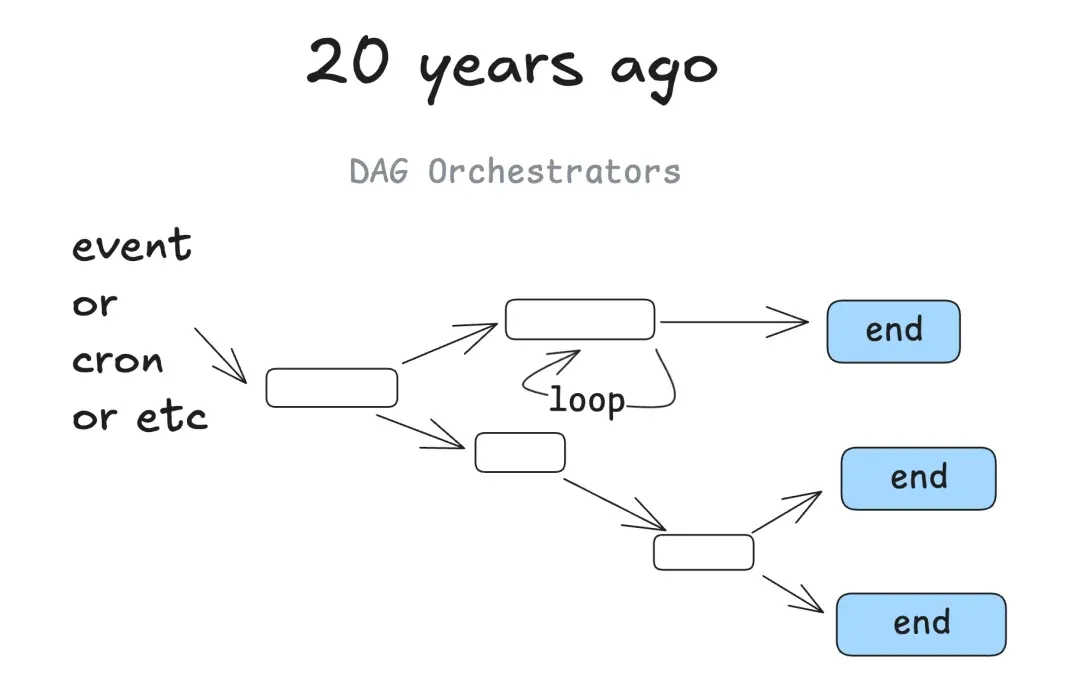

Over the past decade or so, we gained more powerful tools for managing these graphs: DAG (Directed Acyclic Graph) workflow orchestrators such as Airflow and Prefect. They brought observability, modularity, retry mechanisms, and more, making complex, deterministic software systems reliable.

When machine learning models arrived, we simply added them to the graph as ordinary nodes. A “text summarization” or “sentiment classification” model node receives input and produces output, while the control flow of the overall system remains deterministic and controllable.
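As a minimal sketch of “a model as an ordinary node,” the Python below runs a three-step pipeline in dependency order using the standard library’s `graphlib`. The node names, the sample review text, and the keyword-based `classify_sentiment` stand-in are all assumptions made for illustration; a real system would call a trained model there and would likely use an orchestrator rather than this hand-rolled runner.

```python
from graphlib import TopologicalSorter


def fetch_review(_inputs):
    # Deterministic source node (hard-coded sample text for the sketch).
    return "The battery life is great, but the screen scratches easily."


def classify_sentiment(inputs):
    # Stand-in for an ML model node: a real pipeline would call a trained
    # classifier here. The keyword check is only a placeholder.
    text = inputs["fetch_review"].lower()
    return "positive" if "great" in text else "negative"


def route_ticket(inputs):
    # Deterministic downstream node that consumes the model's output.
    return "thank_customer" if inputs["classify_sentiment"] == "positive" else "escalate"


# node name -> (function, list of upstream node names)
PIPELINE = {
    "fetch_review": (fetch_review, []),
    "classify_sentiment": (classify_sentiment, ["fetch_review"]),
    "route_ticket": (route_ticket, ["classify_sentiment"]),
}


def run_pipeline(pipeline):
    # The graph is fixed ahead of time; only node outputs vary at runtime.
    graph = {name: deps for name, (_, deps) in pipeline.items()}
    results = {}
    for name in TopologicalSorter(graph).static_order():
        func, deps = pipeline[name]
        results[name] = func({dep: results[dep] for dep in deps})
    return results


results = run_pipeline(PIPELINE)
print(results["route_ticket"])
```

The model node can be swapped out without touching the graph itself, which is what keeps the overall flow predictable.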

Chapter 2: The Fatal Trap of “General Agent Loop”

The “General Agent” promises to break out of this deterministic graph. Instead of preset flows, we hand the LLM a set of tools (the graph’s “edges”) and let the model work out the path at runtime (the graph’s “nodes”). It sounds beautiful, but in practice we quickly hit a fatal trap: long-context failure.

In today’s popular Agent Loop patterns (such as ReAct), every tool call and its result are appended to the context history. As the conversation progresses, the context keeps growing and fills with one-off “noise” that is irrelevant to the current decision. Eventually the LLM’s reasoning degrades sharply, like someone who cannot hear instructions in a noisy room: it “loses direction” and keeps repeating the same mistakes.
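To make the growth concrete, here is a rough sketch of an append-everything agent loop. It does not call a real model: `fake_llm` and `search_logs` are hypothetical stand-ins, and the simulated tool output is deliberately long and unhelpful. The only point is to show how the history grows on every turn, even when nothing useful is learned.

```python
def fake_llm(context):
    # Hypothetical stand-in for a model call: it keeps requesting the same tool,
    # mimicking an agent stuck retrying a step that does not help.
    return {"tool": "search_logs", "args": {"query": "deploy error"}}


def search_logs(query):
    # Simulated tool that returns a long, mostly irrelevant result.
    return "log line: nothing relevant found\n" * 50


def run_agent_loop(goal, max_turns):
    context = [{"role": "user", "content": goal}]
    sizes = []
    for _ in range(max_turns):
        action = fake_llm(context)
        observation = search_logs(**action["args"])
        # Every call and every result is appended; nothing is ever pruned.
        context.append({"role": "assistant", "content": str(action)})
        context.append({"role": "tool", "content": observation})
        sizes.append(sum(len(message["content"]) for message in context))
    return context, sizes


context, sizes = run_agent_loop("Find why the deploy failed", max_turns=20)
print(f"messages: {len(context)}, chars after turn 1: {sizes[0]}, after turn 20: {sizes[-1]}")
```

Character counts are only a crude proxy for tokens, but the shape is the same: the context grows linearly with the number of turns, and most of it is stale tool output.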

A cruel fact you may already sense intuitively: no matter how long a context window the model supports, you always get better results with small, focused prompts and context.

An Agent that fails 10% of the time cannot be delivered to customers. With current technology, then, trying to build an all-solving “General Agent” is not feasible.

Chapter 3: New Paradigm—“Micro Agent” as “Intelligent Node”

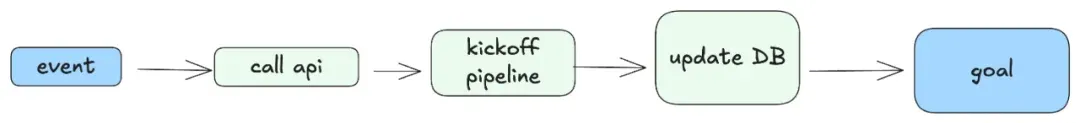

The approach that actually works is not to abandon the reliable deterministic workflows we have proven over decades, but to “demote” the AI Agent: from a “supreme commander” that tries to control everything back to an “intelligent node” responsible for one specific task. This is the core idea of the “Micro Agent.”

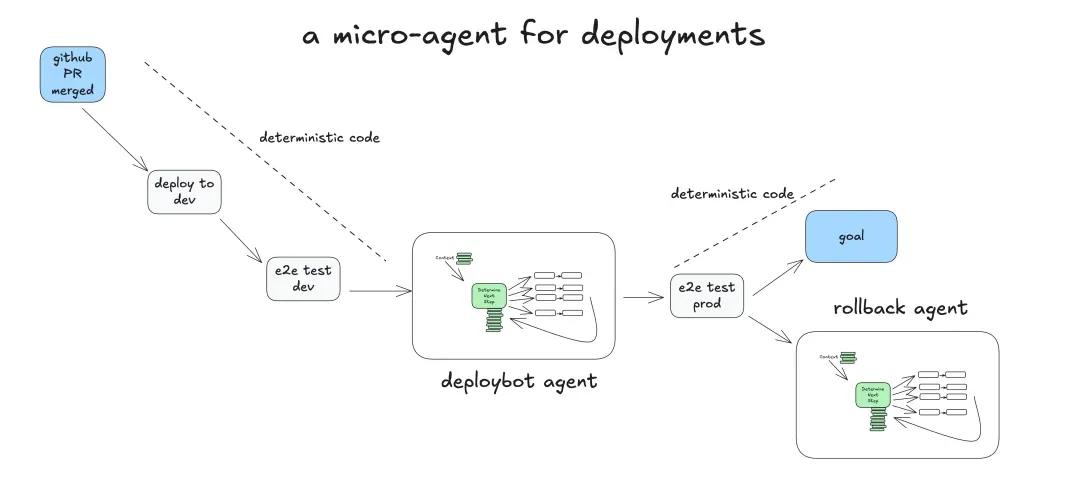

Let’s walk through a real deploybot (deployment bot) example:

- Deterministic code automatically deploys to staging after a PR is merged to `main`, then runs end-to-end tests.
- The Micro Agent is activated with the task: “Deploy SHA 4af9ec0 to production.”
- The Agent calls the `deploy_frontend_to_prod` tool, but deterministic code intercepts the call and requests human approval.
- The human declines and replies in natural language: “Can we deploy backend first?”
- This is where the Micro Agent’s core value shows: it receives and understands this fuzzy human instruction, “translates” it into a new structured instruction, and switches to calling the `deploy_backend_to_prod` tool.
- The subsequent approval, execution, and frontend redeployment are all driven by deterministic code; the Agent only “translates” between human and machine when needed.

In this model, the LLM’s capability is used where it matters: handling unstructured human feedback. The robustness of the overall system, meanwhile, is guaranteed by the deterministic code we already know well.
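The deploybot flow above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the author’s implementation: `micro_agent_next_step` is a hypothetical stand-in for the LLM call (a keyword check instead of a model), human replies are passed in as a scripted list, and “executing” a deploy just writes a log line.

```python
from dataclasses import dataclass

# Tool names taken from the example; everything else here is assumed.
TOOLS = {"deploy_frontend_to_prod", "deploy_backend_to_prod"}


@dataclass
class ToolCall:
    tool: str
    sha: str


def micro_agent_next_step(sha, human_feedback=None):
    # Hypothetical stand-in for the LLM: turns free-form human feedback into
    # a structured tool call. A real Micro Agent would prompt a model here.
    if human_feedback and "backend" in human_feedback.lower():
        return ToolCall("deploy_backend_to_prod", sha)
    return ToolCall("deploy_frontend_to_prod", sha)


def run_deploy(sha, human_replies):
    # Deterministic control flow: the code, not the model, owns the approval
    # gate and decides when a tool call is actually executed.
    log = []
    feedback = None
    for reply in human_replies:
        call = micro_agent_next_step(sha, feedback)
        if call.tool not in TOOLS:
            raise ValueError(f"unknown tool: {call.tool}")
        if reply == "approve":
            log.append(f"executed {call.tool} {call.sha}")
            feedback = None
        else:
            # Declined: keep the reply so the agent can translate it next turn.
            log.append(f"declined {call.tool}")
            feedback = reply
    return log


log = run_deploy("4af9ec0", ["Can we deploy backend first?", "approve", "approve"])
for line in log:
    print(line)
```

Note that the agent never executes anything on its own. It only proposes a structured call, and the surrounding code validates it, gates it behind approval, and carries the state forward.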

Returning to Engineering, Not Worshipping Magic

The path to production-grade AI applications does not run through an all-capable “magic black box.” Quite the opposite: it requires returning to rigorous software engineering principles that have been proven over decades.

We should decompose complex AI tasks the way we decompose systems into microservices. Build the system as a robust, controllable, deterministic workflow, then strategically embed “Micro Agents” as efficient translators and specialists at the key nodes that need “intelligence,” especially where fuzzy, unstructured input has to be handled.

In this first piece of “The Engineering Path for AI Agents” series, we have established the “Micro Agent” as the core architectural idea. In upcoming articles, we will dig into the core techniques for implementing it: how to take full ownership of your prompts, context, and control flow. Stay tuned.
