Word count: ~2600 words

Estimated reading time: ~10 minutes

Last updated: August 25, 2025

Is this article for you?

✅ You think deeply about AI Agent architecture and are confused by the complex frameworks that are popular right now;

✅ You’ve been tortured by complex multi-Agent systems such as LangChain and AutoGPT, and you yearn to return to simplicity and essence;

✅ You believe the best Agent design should maximize the model’s native intelligence, not pile on complex engineering scaffolding.

Core Principles

- Introduction: The “Tower of Babel” predicament in the AI Agent field

- Principle 1 (Simple Loop): Why one main loop beats complex multi-Agent collaboration

- Principle 2 (Empower, Don’t Encapsulate): The LLM Search vs. RAG route debate

- Principle 3 (Structured Communication): “Compiling” human intent into machine language

- Principle 4 (Human-in-the-Loop): The best Agent is “tameable”

- Conclusion: Returning to “The Bitter Lesson”

Introduction: The “Tower of Babel” Predicament in the AI Agent Field

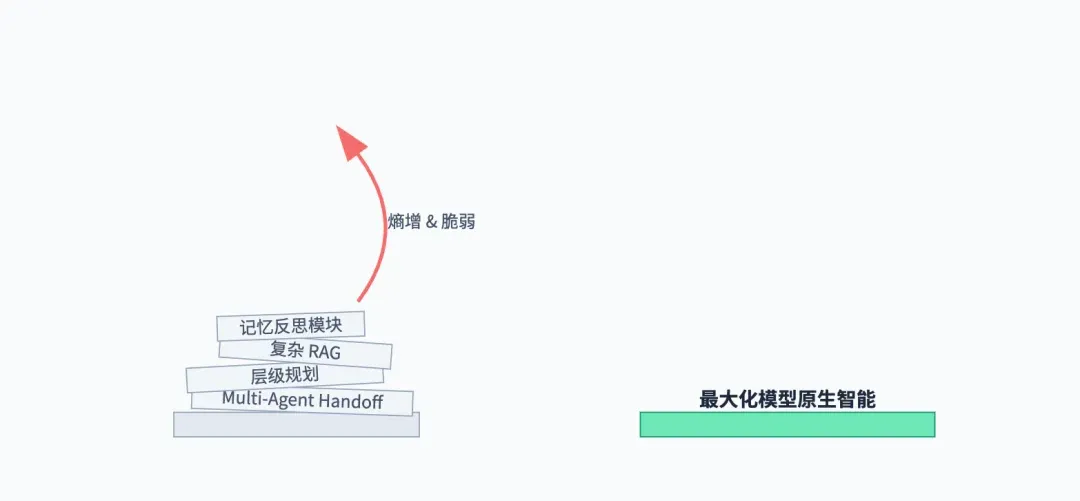

The current AI Agent field reminds me of the biblical story of the Tower of Babel. We’re obsessed with building increasingly complex systems: multi-Agent collaboration, hierarchical planning, memory, reflection, and so on. New frameworks keep appearing, each trying to use elaborate engineering to build a tower that reaches “artificial general intelligence.”

Yet the taller the tower, the more fragile the system becomes and the harder it is to debug. We’ve introduced too many “middlemen”: complex Agent handoffs, opaque RAG retrieval, layer upon layer of nested Prompt chains. Each link adds entropy to the system and distorts or discards part of the model’s native intelligence. We seem to be moving further from our goal.

Claude Code arrived like a breath of fresh air. Its design philosophy runs counter to the current “showoff” trend and returns to the first principles of building Agents: maximize the model’s native intelligence and minimize the complexity the framework adds. Today I’ll take apart the minimalist design philosophy behind Claude Code and argue why this seemingly “simple” approach can beat most complex Multi-Agent systems at the current stage.

Principle 1: Simple Control Loop — Reliability Above All

Many current Agent frameworks are obsessed with building complex “collaboration graphs.” A PM Agent generates requirements and hands them to an Engineer Agent for coding, then a QA Agent tests the result. It sounds beautiful, but in practice every “handoff” loses a great deal of information and is a potential point of failure.

Claude Code’s design is extremely restrained: it has only one main control loop and one flat message history. Even when handling hierarchical tasks, it spawns at most one layer of stateless sub-agents, whose results are merged back into the main loop as soon as they finish.
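To make the shape of such a loop concrete, here is a minimal sketch in Python. It is not Claude Code’s actual implementation: the `model` callable, the action dictionaries, and the `subagent` tool name are all assumptions made for illustration. What it shows is one loop, one flat list of messages, and sub-agents that start from a fresh history and cannot spawn further sub-agents.

```python
from typing import Callable

# A message is a role plus text; the whole history is one flat list.
Message = dict[str, str]

# Hypothetical model interface (an assumption): given the history, return
# {"type": "tool", "name": ..., "input": ...} or {"type": "final", "text": ...}.
Model = Callable[[list[Message]], dict]
Tool = Callable[[str], str]


def run_agent(model: Model, tools: dict[str, Tool], task: str,
              max_steps: int = 20, depth: int = 0) -> str:
    """Run a single control loop over one flat message history."""
    history: list[Message] = [{"role": "user", "content": task}]

    def spawn_subagent(subtask: str) -> str:
        # A sub-agent starts from a fresh history (stateless) and runs at
        # depth 1, where the "subagent" tool is not offered again.
        return run_agent(model, tools, subtask, max_steps, depth=1)

    available = dict(tools)
    if depth == 0:
        available["subagent"] = spawn_subagent  # at most one layer deep

    for _ in range(max_steps):
        action = model(history)
        if action["type"] == "final":
            return action["text"]
        tool = available.get(action["name"])
        if tool is None:
            result = f"error: unknown tool {action['name']!r}"
        else:
            result = tool(action["input"])
        # Every call and every result lands in the same flat history,
        # so the full trace can be read top to bottom.
        history.append({"role": "assistant",
                        "content": f"call {action['name']}: {action['input']}"})
        history.append({"role": "tool", "content": result})
    return "stopped: step limit reached"
```

Because the sub-agent only returns a string, its result re-enters the main history as an ordinary tool message, which is what keeps the trace flat.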

Why Is “Simple” Better?

1. Debuggability: A flat message history means you can clearly trace every step of the Agent’s thinking. The internal states and communications of complex multi-Agent systems are huge black boxes; once errors occur, they are nearly impossible to debug.

2. Embrace Model Evolution: Complex frameworks essentially use engineering “patches” to compensate for the shortcomings of current models’ “IQ.” But those patches keep you from reaping the dividends of stronger future models. A simple loop benefits the most from each model upgrade.

First Principle: An Agent’s reliability and predictability matter far more than the architecture’s “elegance.” While LLMs remain “probabilistic” entities, the simplest control structure is the most robust.

Principle 2: Empower, Don’t Encapsulate — The LLM Search vs. RAG Route Debate

How do you get an Agent to understand your codebase? The mainstream approach is RAG (Retrieval-Augmented Generation): vectorize the codebase, store it in a database, then perform semantic search. It sounds scientific, but Claude Code chose a “wilder” path: LLM Search.

It doesn’t bother with vector databases. It hands the most primitive command-line tools, such as grep, find, and ls, directly to the large model and lets the model decide for itself how to explore the file system. It’s like teaching someone to fish rather than giving them a fish.
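As a rough illustration of what “handing over the tools” can look like, the sketch below exposes three small functions that mimic ls, find, and grep, plus a dispatcher the model’s tool calls would go through. This is an assumption-laden stand-in: the function names, the `TOOLS` registry, and `call_tool` are hypothetical, and the tools are written in pure Python (rather than shelling out to the real commands) only so the example stays self-contained.

```python
import re
from pathlib import Path


def ls(root: str) -> str:
    """List the entries directly under a directory, similar to `ls`."""
    return "\n".join(sorted(p.name for p in Path(root).iterdir()))


def find(root: str, pattern: str = "*") -> str:
    """Recursively list files matching a glob, similar to `find -name`."""
    base = Path(root)
    return "\n".join(sorted(p.relative_to(base).as_posix()
                            for p in base.rglob(pattern) if p.is_file()))


def grep(root: str, regex: str) -> str:
    """Search file contents for a regex, similar to `grep -rn`."""
    base = Path(root)
    needle = re.compile(regex)
    hits = []
    for path in sorted(base.rglob("*")):
        if not path.is_file():
            continue
        try:
            text = path.read_text(encoding="utf-8")
        except (UnicodeDecodeError, OSError):
            continue  # skip binary or unreadable files
        for lineno, line in enumerate(text.splitlines(), start=1):
            if needle.search(line):
                hits.append(f"{path.relative_to(base).as_posix()}:{lineno}:{line}")
    return "\n".join(hits)


# Hypothetical registry: the model picks a name and arguments, and the
# raw text output goes straight back into its context.
TOOLS = {"ls": ls, "find": find, "grep": grep}


def call_tool(name: str, **kwargs: str) -> str:
    """Dispatch a model-requested tool call and return its raw text output."""
    if name not in TOOLS:
        return f"error: unknown tool {name!r}"
    return TOOLS[name](**kwargs)
```

Note that nothing here ranks, embeds, or summarizes: the model sees the matching lines verbatim and decides what to look at next.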

Why Is “Empower” Better?

1. Avoid Information Abstraction Loss: RAG’s vectorization process is itself a “lossy compression” of information. LLM Search lets the model read the original code text directly, preserving all context and details.

2. Leverage the Model’s Native Intelligence: What understands code best isn’t any embedding algorithm; it’s the large model itself. Letting the model understand code in the way it does best, rather than forcing it to accept “spoon-fed,” second-hand information from RAG, is actually more efficient and accurate.

First Principle: Don’t try to “encapsulate” and “simplify” information for the model with complex engineering. Instead, give the model the most original, complete context and empower it to use basic tools for autonomous exploration. Trust the model’s intelligence, not your framework.

Principle 3: Structured Communication — “Compiling” Human Intent into Machine Language

Communicating with LLMs is essentially a “noise reduction” campaign. Fuzzy natural language is full of ambiguity. Claude Code’s brilliance lies in its heavy use of XML tags and role-playing, which structure our intent into “instructions” the model can understand precisely.

<good-example>

pytest /foo/bar/tests

</good-example>

<bad-example>

cd /foo/bar && pytest tests

</bad-example>

This isn’t just simple Prompt Engineering. It simulates the work of a compiler: “compiling” high-level human language (our requirements) into low-level, unambiguous, machine-executable intermediate code (Prompts with XML tags and roles).
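The “compilation” step can be sketched as a small prompt builder. This is only an illustration: the `tag` and `build_prompt` helpers, the `rule` tag, and the rule wording are assumptions, while the `good-example` and `bad-example` tags and their contents are taken from the example above.

```python
def tag(name: str, body: str) -> str:
    """Wrap text in an XML-style tag so its boundaries are unambiguous."""
    return f"<{name}>\n{body.strip()}\n</{name}>"


def build_prompt(rule: str, good: list[str], bad: list[str]) -> str:
    """Assemble a rule plus labeled examples into one structured prompt."""
    parts = [tag("rule", rule)]  # "rule" is a hypothetical tag name
    parts += [tag("good-example", g) for g in good]
    parts += [tag("bad-example", b) for b in bad]
    return "\n\n".join(parts)


prompt = build_prompt(
    # Hypothetical wording for the rule the two examples seem to illustrate.
    rule="Prefer absolute paths; avoid changing directories with cd.",
    good=["pytest /foo/bar/tests"],
    bad=["cd /foo/bar && pytest tests"],
)
print(prompt)
```

The command text is passed through unescaped on purpose, so the model sees `&&` exactly as it would appear in a shell.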

Why Is “Structured” Better?

First Principle: An LLM’s core capability is “pattern matching.” By providing structured input with clear boundaries (like XML tags), we make it much easier for the model to recognize and execute our intent, which improves the stability and accuracy of its output.

Principle 4: Human-in-the-Loop — The Best Agent Is “Tameable”

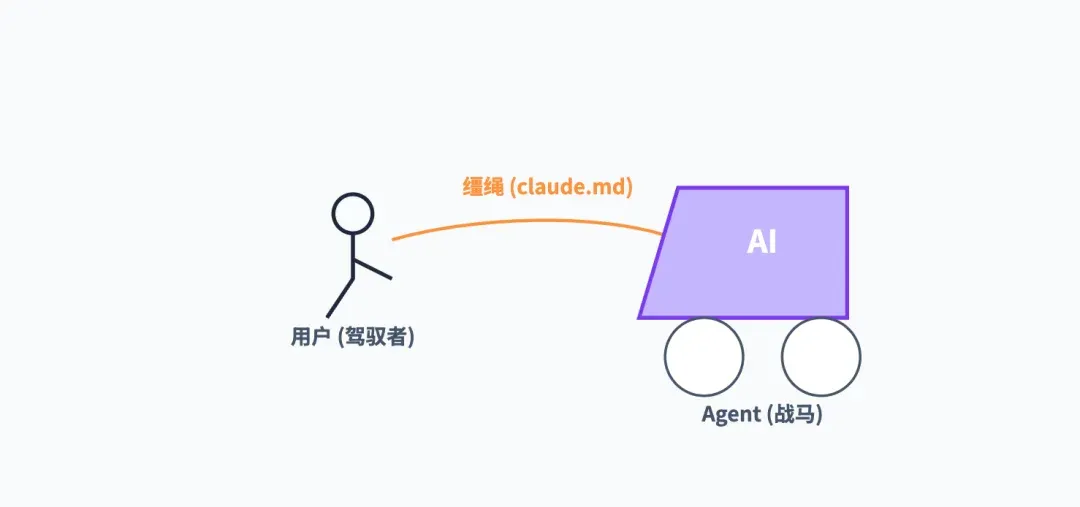

A huge misconception about current Agents is the pursuit of complete “autonomy.” Claude Code’s design reflects a “Human-in-the-Loop” philosophy throughout.

- claude.md file: This is a brilliant design. It gives users a “steering wheel,” letting them define the Agent’s behavior preferences, code conventions, forbidden areas, etc., through a persistent file. This transforms the Agent from a “black box” into a partner that can be “trained” and “shaped.”

- Todo List and Plan Mode: These mechanisms force the Agent to “report its plan” to the user before taking large-scale actions. This gives users precious “veto power” and “correction power,” avoiding the tragedy of an Agent working hard for half an hour only to head in the completely wrong direction.
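Both mechanisms can be sketched in a few lines of Python. This is a simplified illustration, not how Claude Code implements them: the function names, the `user-rules` tag, and the `approve` and `run_step` callbacks are all hypothetical. The first two functions load a persistent rules file into the system prompt; the third shows a plan to the user and runs nothing unless it is approved.

```python
from pathlib import Path
from typing import Callable


def load_user_rules(project_dir: str, filename: str = "claude.md") -> str:
    """Read the user's persistent rules file, or return "" if it is absent."""
    path = Path(project_dir) / filename
    return path.read_text(encoding="utf-8") if path.is_file() else ""


def build_system_prompt(base: str, rules: str) -> str:
    """Append the user's rules to the base prompt inside a labeled block."""
    if not rules.strip():
        return base
    # "user-rules" is a hypothetical tag name chosen for this sketch.
    return f"{base}\n\n<user-rules>\n{rules.strip()}\n</user-rules>"


def execute_with_approval(plan: list[str],
                          approve: Callable[[list[str]], bool],
                          run_step: Callable[[str], str]) -> list[str]:
    """Show the whole plan first; run nothing unless the user approves."""
    if not approve(plan):
        return []  # veto: no step is executed
    return [run_step(step) for step in plan]
```

In an interactive tool, `approve` would print the plan and wait for a yes or no; passing it in as a callback keeps the gate easy to test and hard to bypass.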

Why Is “Tameable” Better?

First Principle: Before AGI arrives, an AI Agent’s core value isn’t replacing humans but augmenting them. A good Agent should be like a well-trained warhorse, powerful yet obedient, not an uncontrollable tiger. Controllability, predictability, and intervenability are the key criteria for judging whether an Agent is “usable” at the current stage.

Conclusion: Returning to “The Bitter Lesson”

Computer scientist Rich Sutton wrote a famous essay called “The Bitter Lesson.” Its core point: 70 years of AI research show that complex methods that try to hard-code human knowledge into systems ultimately lose to general, scalable methods that leverage massive computation.

Today’s AI Agent field is replaying this story. Those complex Multi-Agent frameworks amount to trying to “teach” AI how to think through elaborate engineering. Claude Code’s minimalist philosophy returns to the essence: trust and maximize the large model’s own powerful, general learning and reasoning capabilities, and provide only the lightest, most direct guidance where necessary.

This, perhaps, is the true secret to building the next killer AI Agent.

Less is More!

Found Mr. Guo’s analysis insightful? Drop a 👍 and share with more friends who need systematic thinking!


🌌 On the road to AGI, the most powerful lever is always simplicity and first principles.