Your User Research Is Outdated:

Anthropic’s AI Interviewed 1,250 People and Uncovered Data’s “Subconscious”

By Mr. Guo · Reading Time: 8 Min

A Quick Note

If you work in product or growth, you’re constantly torn between two extremes. Either you run surveys: high volume (quantitative) but shallow, all “fill-in-the-blank.” Or you conduct user interviews: deep insights (qualitative) but expensive, slow, and impossible to scale.

As someone who worships efficiency, I’ve always felt that user research is the least efficient black box in internet product development.

But Anthropic just published a research blog post that flips the whole table. They built a tool called Anthropic Interviewer, which turns Claude into a “professional interviewer,” and used it to conduct in-depth interviews with 1,250 professionals (scientists, creative workers, and members of the general workforce) in one go.

This isn’t just research — it’s a brutal demonstration of “Qualitative at Scale.”

Today, I’m breaking down this report to show you what humans actually reveal when AI starts asking the questions.

01

Methodology: How Does AI Become a “Seasoned Interviewer”?

Previously, using AI for research meant, at most, having it analyze survey results. This time, Anthropic had AI run the entire interview process end to end.

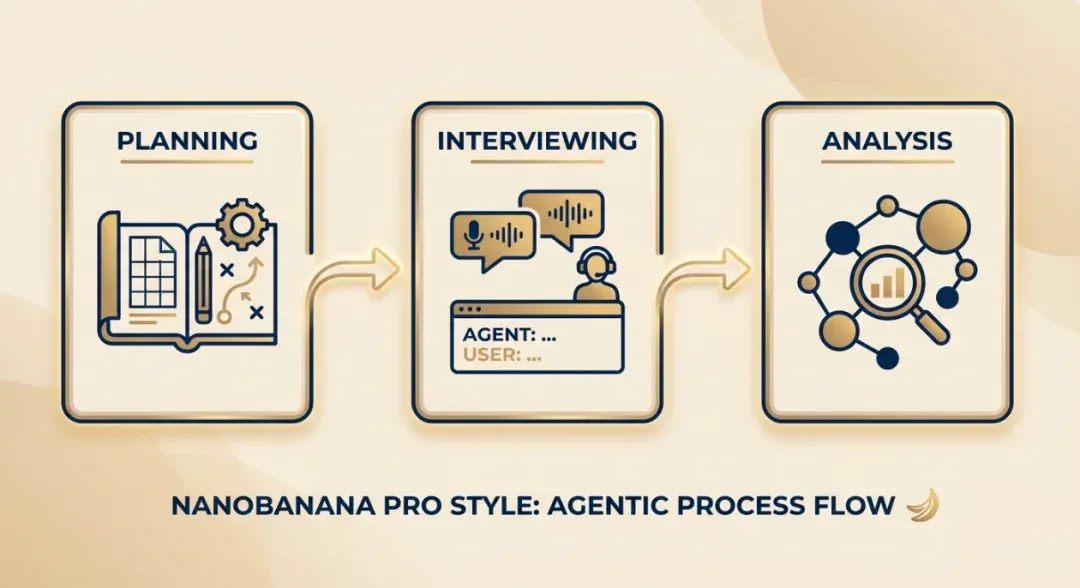

They broke it into three agentic steps:

- Planning (The War Room): AI generates a dynamic interview outline from the research goals (such as “understand how scientists use AI”) combined with system prompts. It’s not a rigid questionnaire; like a human interviewer, it prepares follow-up strategies in advance.

- Interviewing (The Interview Room): This is the brilliant part. AI conducts 10 to 15 minute real-time conversations with users in the Claude.ai interface. It doesn’t just ask questions; it probes adaptively based on each response. For example:

  - User says: “I’m a bit worried.”

  - AI follows up: “Are you specifically worried about career prospects, or creative control? Can you give an example?”

- Analysis (Intelligence HQ): After the interviews end, AI digests all 1,250 transcripts, extracts emergent themes, and outputs reports that combine them with quantitative data.

Anthropic Interviewer’s Three-Step Agentic Process
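The three steps can be sketched as a small control loop. This is a hypothetical illustration, not Anthropic’s implementation: every name below is invented, and simple keyword rules stand in for the model calls that would draft the outline, decide when an answer is too vague, and tag themes. The scripted respondent replays the “I’m a bit worried” exchange from the example above.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import Callable

# HYPOTHETICAL sketch: every name below is invented for illustration.
# Simple keyword rules stand in for the LLM calls a real system would make.

VAGUE_MARKERS = ("worried", "not sure", "it depends", "kind of")


@dataclass
class InterviewPlan:
    goal: str
    questions: list[str]
    max_probes: int = 2  # assumed cap on follow-ups per question


@dataclass
class Transcript:
    turns: list[tuple[str, str]] = field(default_factory=list)  # (speaker, text)


def make_plan(goal: str) -> InterviewPlan:
    """Planning: turn a research goal into an outline (hard-coded here)."""
    return InterviewPlan(goal, ["How do you use AI in your work today?",
                                "How do you feel about that?"])


def needs_probe(answer: str) -> bool:
    """True if the answer is very short or contains a vague marker."""
    text = answer.lower()
    return len(text.split()) < 5 or any(m in text for m in VAGUE_MARKERS)


def follow_up(answer: str) -> str:
    """Build a clarifying question from a template instead of a model."""
    return f'You said "{answer}" Can you be more specific and give an example?'


def run_interview(plan: InterviewPlan, respond: Callable[[str], str]) -> Transcript:
    """Interviewing: ask each question, probing vague answers up to max_probes times."""
    transcript = Transcript()
    for question in plan.questions:
        prompt = question
        for _ in range(plan.max_probes + 1):
            answer = respond(prompt)
            transcript.turns += [("ai", prompt), ("user", answer)]
            if not needs_probe(answer):
                break
            prompt = follow_up(answer)
    return transcript


def extract_themes(transcripts: list[Transcript],
                   themes: dict[str, tuple[str, ...]]) -> Counter:
    """Analysis: count how many interviews mention each theme at least once."""
    counts: Counter = Counter()
    for t in transcripts:
        said = " ".join(text.lower() for who, text in t.turns if who == "user")
        for theme, keywords in themes.items():
            if any(k in said for k in keywords):
                counts[theme] += 1
    return counts


# Usage: a scripted respondent replays canned answers in order.
answers = iter(["I draft weekly reports with it every day.",
                "I'm a bit worried.",
                "Mostly my career prospects; my manager might think I'm lazy."])
demo = run_interview(make_plan("understand how people use AI at work"),
                     lambda prompt: next(answers))
themes = extract_themes([demo], {"career": ("career",), "stigma": ("lazy", "hide")})
print(len(demo.turns))  # 6 turns: two questions, one follow-up, three answers
print(themes)
```

The piece worth noticing is the bounded probe loop: a vague answer triggers a follow-up, but `max_probes` caps how far the interviewer digs on any one question, which is one simple way to keep each session inside a fixed time budget like the 10 to 15 minutes described here.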

My Take: This is how agents should be used, not for churning out garbage marketing copy but for “high-compute empathy” work like this.

02

Regular Workers’ Secret: “Shadow AI” and Workplace Shame

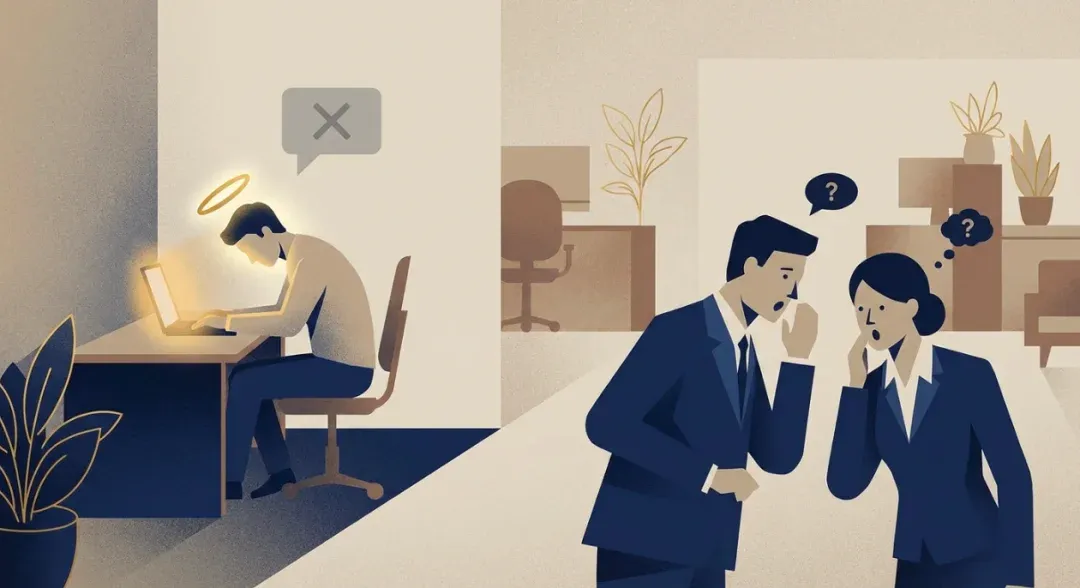

Interviews with 1,000 members of the general workforce revealed a fascinating workplace phenomenon: shadow AI usage.

Workplace “Shadow AI” and Social Stigma

- Surface Data: 86% said AI saved time, and 65% were satisfied with AI.

- Deeper Insight: 69% admitted there’s a “social stigma” around using AI at work.

A fact-checker told the AI: “I have colleagues who said they hate AI, so I just shut up. I never tell anyone about my workflow because I know how people view this stuff.”

What does this mean? Workplaces are developing an unspoken understanding about AI: everyone is quietly using it to boost efficiency (or to slack off), but nobody dares mention it openly for fear of being labeled “lazy” or “unprofessional.”

People aren’t being “replaced by AI,” as doomsayers predicted. They’re becoming “centaurs,” keeping their core professional identity (the pastor still delivers the sermons) while handing all the back-office admin work to AI.
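The “surface” and “deeper” numbers are not describing two different crowds. Assuming both percentages are out of the same 1,000 interviewees (an assumption; the denominators aren’t spelled out here), inclusion-exclusion puts a floor on the overlap: at least 86% + 69% - 100% = 55% of respondents said AI saved them time and also reported stigma. The sketch below computes that bound and shows how such percentages could be tallied from per-interview yes/no codes. The flag names and the four sample interviews are hypothetical, and this is not a description of how Anthropic computed its figures.

```python
# Hypothetical illustration: the flag names and four sample interviews are invented.

def share(interviews: list[dict[str, bool]], flag: str) -> float:
    """Percentage of interviews coded with `flag` (a missing flag counts as False)."""
    if not interviews:
        return 0.0
    hits = sum(1 for codes in interviews if codes.get(flag, False))
    return 100.0 * hits / len(interviews)


def min_overlap(pct_a: float, pct_b: float) -> float:
    """Smallest possible % of people in both groups (inclusion-exclusion),
    assuming both percentages share the same denominator."""
    return max(0.0, pct_a + pct_b - 100.0)


sample = [
    {"saved_time": True, "satisfied": True, "mentions_stigma": True},
    {"saved_time": True, "satisfied": False, "mentions_stigma": True},
    {"saved_time": True, "satisfied": True, "mentions_stigma": False},
    {"saved_time": False, "satisfied": False, "mentions_stigma": True},
]
for flag in ("saved_time", "satisfied", "mentions_stigma"):
    print(f"{flag}: {share(sample, flag):.0f}%")  # 75%, 50%, 75%

# Applied to the reported figures:
print(min_overlap(86, 69))  # 55.0: at least 55% saved time AND mentioned stigma
print(min_overlap(65, 69))  # 34.0: at least 34% were satisfied AND mentioned stigma
```

Because one interview can carry several codes, the percentages can sum past 100%, which is exactly why a satisfied, time-saving worker can also be the one hiding their workflow.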

03

Creative Workers’ Struggle: “Productivity Poison”

Interviews with 125 creatives (writers, designers, musicians) painted a completely different picture.

It’s full of paradoxes:

- Extreme Efficiency: 97% of creatives said AI saved time.

- Extreme Anxiety: They’re trapped in an “Illusion of Control.”

A game scriptwriter confessed: “Calling it human-machine collaboration is mostly an illusion… rarely do I feel like I’m driving the creativity.” A musician said: “I hate admitting this, but that plugin really controls most of the process.”

My Insight: The creative class is experiencing a “soul crisis.” We used to think “creativity” was humanity’s last fortress. But now, creatives find themselves reduced to AI’s “reviewers” and “prompt engineers.” Output increased (from 2,000 words to 5,000 words daily), but that “creator god” feeling is fading.

04

Scientists’ Frustration: “I Wanted a Partner, You Gave Me a Secretary”

The scientist group (125 people) gave the most rational — and ironic — feedback.

- Ideal: 91% of scientists want AI to help with hypothesis generation and experiment design. They want an Einstein-level AI partner.

- Reality: They only dare to use AI for coding, polishing papers, and literature searches.

- Obstacle: Trust.

A biologist said bluntly: “If I have to check every detail AI says to prevent hallucinations, what’s the point?” Scientists don’t fear losing their jobs, since their work depends on too much tacit knowledge for AI to learn; what they fear is being misled.

Current Conclusion: In science, AI remains a “smart secretary,” far from being an “AI that’s also a scientist.”

05

Endgame Thinking: The “Industrial Revolution” of Qualitative Research

The value of this Anthropic study isn’t the discovery that “everyone uses AI”; it’s the proof that tools like Anthropic Interviewer are viable.

What does this mean for product managers and founders?

- New Paradigm for PMF Validation: Previously, validating an idea meant intercepting people at Starbucks with surveys or posting on Reddit. In the future: deploy an interviewer agent, chat with 500 potential users overnight, and read the “pain point clustering report” the next morning.

- Affective Computing Lands: We can finally quantify subtle emotional metrics like “anxiety,” “shame,” and “trust.”

- From “Q&A” to “Dialogue”: Traditional surveys are static: I ask, you answer. AI Interviewer is dynamic: back and forth. The truth often hides in the third follow-up question.

I’m Mr. Guo. If your product still relies on “guessing” what users want, or you’re still sending out SurveyMonkey links nobody fills out, it’s time to wake up. AI is learning to read minds while you’re still using an abacus.

(End)