By Mr. Guo

Foreword

Hey, it’s Mr. Guo. I recently got my hands on Opal, an experimental tool from Google Labs. My gut reaction? It turns something like n8n—traditionally a complex workflow builder—into a toy that anyone can play with.

These “AI LEGO kits” keep popping up. Do they make our purpose as creators clearer and freer? Or do they turn us into cheaper, more obedient parts within big-tech ecosystems? Instead of a superficial tool review, I want to unpack this from first principles, through a product-manager lens: What’s Google’s strategy, what value does Opal create, and, most importantly, how do we use it to serve our own goals?

1. What Is Opal? A Toy That Offends Everyone

Opal is an AI agent builder incubated inside Google Labs and released as a public experiment, giving us a peek into Google’s plan to democratize agents.

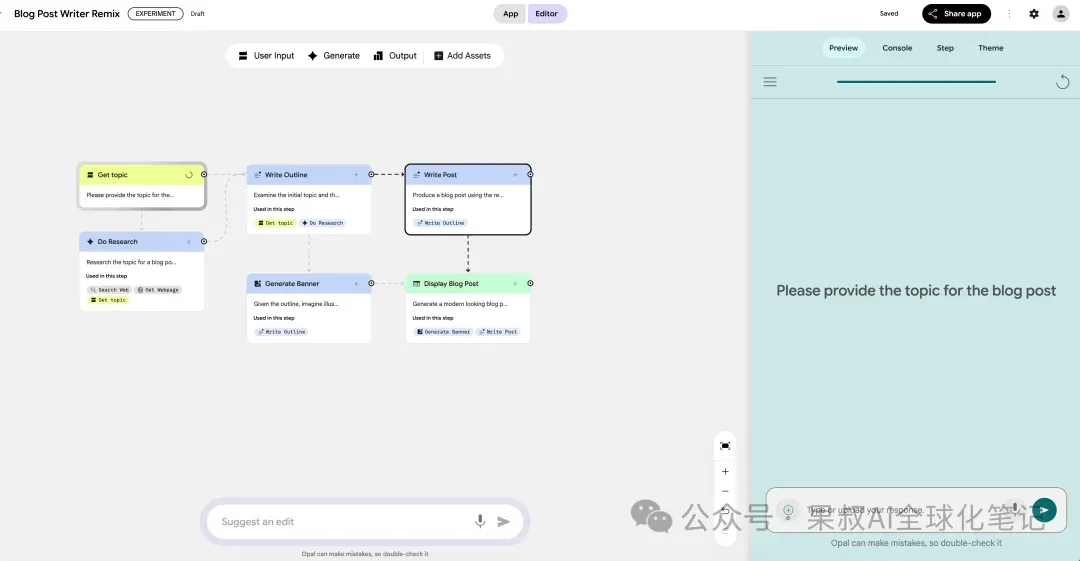

If you’ve used Zapier, Make, OpenAI AgentKit, Jiandaoyun, or Notion Automation, the UI looks familiar: a node-and-connection visual workflow editor. Opal breaks a heavyweight agent task into drag-and-drop LEGO blocks:

- Start: defines how the agent is triggered.

- Text Input: a prompt/data field for users.

- Model: the “brain” (Google Gemini Pro). You set the system prompt, define the role, etc.

- Choices: branching logic so the agent isn’t a straight line but can split based on “yes/no” or other AI outputs.

- Output: where results (text, charts, JSON) get presented.
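To make the node-and-connection idea concrete, here is a minimal Python sketch of how such a flow can be modeled: each block transforms a shared state, and each connection picks the next block. This is not Opal's API (the post doesn't describe one). `Node`, `run_flow`, and `fake_model` are hypothetical names, and `fake_model` is a keyword check standing in for the Gemini-powered Model block.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Tuple

State = Dict[str, str]

def fake_model(system_prompt: str, user_text: str) -> str:
    """Stand-in for the Model block: a keyword check, not a real LLM call."""
    return "yes" if "urgent" in user_text.lower() else "no"

@dataclass
class Node:
    name: str
    run: Callable[[State], State]              # what the block does to the state
    route: Callable[[State], Optional[str]]    # next block's name, or None to stop

def run_flow(nodes: Dict[str, Node], start: str, state: State) -> Tuple[List[str], State]:
    """Follow connections from `start`; return visited block names and final state."""
    visited: List[str] = []
    current: Optional[str] = start
    while current is not None:
        node = nodes[current]
        visited.append(node.name)
        state = node.run(state)
        current = node.route(state)
    return visited, state

nodes = {
    "start": Node("start", lambda s: s, lambda s: "input"),
    "input": Node("input", lambda s: {**s, "text": s["raw"].strip()}, lambda s: "model"),
    "model": Node(
        "model",
        lambda s: {**s, "verdict": fake_model("Is this urgent? Answer yes/no.", s["text"])},
        lambda s: "choices",
    ),
    # Choices: branch on the model's yes/no output.
    "choices": Node("choices", lambda s: s,
                    lambda s: "escalate" if s["verdict"] == "yes" else "summary"),
    # Two Output blocks, one per branch.
    "escalate": Node("escalate", lambda s: {**s, "output": "Escalate: " + s["text"]}, lambda s: None),
    "summary": Node("summary", lambda s: {**s, "output": "Log only: " + s["text"]}, lambda s: None),
}

path, final = run_flow(nodes, "start", {"raw": "  Urgent: server down  "})
print(path)             # ['start', 'input', 'model', 'choices', 'escalate']
print(final["output"])  # Escalate: Urgent: server down
```

Feeding in a non-urgent request sends the same graph down the `summary` branch, which is all a Choices block really does: route on a model output.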

The twist: you don’t have to script each node. Core steps are pre-packaged agent subflows—Deep Research, for instance, is a native block. If you tried wiring Deep Research in n8n from scratch, you’d groan: find an open-source repo, wrap it, write scripts, integrate. Opal pushes “foolproof workflow” to the extreme.

So Opal ends up “offending” everyone:

- Senior developers scoff: “This is a toy. Can it do ReAct? Run multi-agent setups? Plug into vector DBs? No? Then why bother?”

- Non-technical operators groan: “What’s a Model? What’s Choices? I just want an automation that writes my weekly report!”

Yet that double offense hits the bull’s-eye: the middle layer—people who understand business logic, know a little tech, but don’t want to drown in code. That’s us: indie hackers, PMs, growth folks.

2. PMF Radar: What Pain Does Opal Really Solve?

Whenever I analyze a product, I pull out my PMF radar. Let’s run Opal through the scanner.

Problem: Size & Frequency

Opal isn’t solving “how to write code.” It’s solving “how to orchestrate AI.” In the agent era, the biggest pain is connecting AI capability to business workflow—the “last mile.”

You have Gemini as the brain. You have a SaaS subscription flow. How does the brain serve the flow? Example: “When a user signs up, automatically call AI, analyze the user, and tag them high/medium/low potential.”

Today that bridge either requires engineers stitching APIs (high-code) or Zapier-style automations (no-code but weak AI). Opal targets AI-native low-code orchestration. The problem is big and frequent.
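Here is what that sign-up bridge looks like when you write it by hand: a minimal Python sketch, assuming a hypothetical `call_model` function that sends a prompt to an LLM and returns its text reply. The hook builds a prompt, asks for a JSON tag, and validates the answer before using it. The function names and the JSON reply format are illustrative assumptions, not Opal or Gemini APIs.

```python
import json
from typing import Callable, Dict

ALLOWED_TAGS = ("high", "medium", "low")

def build_prompt(user: Dict[str, str]) -> str:
    """Turn the sign-up record into a classification prompt."""
    return (
        "Classify this new sign-up's potential as high, medium, or low. "
        'Reply only with JSON like {"tag": "high"}.\n'
        + json.dumps(user, sort_keys=True)
    )

def tag_signup(user: Dict[str, str], call_model: Callable[[str], str]) -> str:
    """Hypothetical sign-up hook: ask the model for a tag, then validate it."""
    raw = call_model(build_prompt(user))
    try:
        tag = str(json.loads(raw).get("tag", "")).lower()
    except (json.JSONDecodeError, AttributeError):
        # Reply was not JSON, or was JSON but not an object.
        tag = ""
    # Never trust free-form model output: fall back if the tag is off-list.
    return tag if tag in ALLOWED_TAGS else "low"

# Lambdas stand in for a real model call.
print(tag_signup({"email": "ana@acme.io", "plan": "team"}, lambda p: '{"tag": "High"}'))  # high
print(tag_signup({"email": "bo@example.com"}, lambda p: "Sure! I think high."))           # low
```

The validation step is the part no-code tools tend to hide: models sometimes answer in prose, and the fallback keeps a malformed reply from becoming a bogus tag. Defaulting to "low" is an arbitrary choice here; a real system might queue the user for manual review instead.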

Value Fit

Opal’s core value isn’t raw power; it’s speed. The most precious resource for indie builders is time—the opportunity cost of testing PMF. If I have an “AI logo design” idea, I don’t want to spend two weeks wrangling Python, LangChain, and Flask. I want a bare-minimum MVP in two hours to ship to ten seed users and see who pays.

Opal compresses AI MVP time-to-market from weeks to hours. It’s the agent-era version of a lean canvas—a vibe-check tool.

Monetization & NPS

Opal is practically pre-MVP. There’s no pricing page, so we can only speculate. Predictable plays:

- Upsell Gemini credits (“use more flows, pay for more tokens”).

- Workspace add-on (bundle into Google Workspace Enterprise).

- Developer marketplace (sell premium blocks/workflows).

The NPS bet? Give power users a “holy crap, I built this in 30 minutes” moment. Word-of-mouth first, monetization later—a classic Google tactic.

3. Hands-On: Building a Product Hunt Competitor Analysis

Enough theory—let’s build. I tried recreating a competitive analysis flow for Product Hunt using Opal.

Prompt

“I’m building a Product Hunt competitor analyzer…” (full prompt omitted for brevity)

What Opal Built

- Input form: a simple landing page where I paste a Product Hunt URL.

- Scraper: uses Google’s “Web Reader” agent to pull structured data (title, description, upvotes) without me touching the DOM.

- AI analysis: calls Gemini Pro with a system prompt to summarize, categorize, and flag differentiation.

- Actionable checklist: outputs a prioritized to-do list (“improve landing page clarity,” “highlight unique features,” etc.).
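Opal's Web Reader and Gemini steps are black boxes, but the shape of this pipeline can be sketched in plain Python. This version assumes the page exposes `og:title` and `og:description` meta tags (an assumption; I haven't checked Product Hunt's actual markup), reads them with the standard-library `html.parser`, and replaces the Gemini analysis with two hypothetical keyword rules that emit a to-do list. It skips upvotes and network fetching, so it takes raw HTML as input.

```python
import re
from html.parser import HTMLParser
from typing import Dict, List, Optional, Tuple

class MetaReader(HTMLParser):
    """Collects <meta property|name=... content=...> pairs: a crude Web Reader stand-in."""

    def __init__(self) -> None:
        super().__init__()
        self.meta: Dict[str, str] = {}

    def handle_starttag(self, tag: str, attrs: List[Tuple[str, Optional[str]]]) -> None:
        if tag != "meta":
            return
        a = dict(attrs)
        key = a.get("property") or a.get("name")
        content = a.get("content")
        if key and content is not None:
            self.meta[key] = content

def extract(html: str) -> Dict[str, str]:
    """Scraper step: pull title and description from Open Graph tags."""
    reader = MetaReader()
    reader.feed(html)
    return {
        "title": reader.meta.get("og:title", ""),
        "description": reader.meta.get("og:description", ""),
    }

def checklist(product: Dict[str, str]) -> List[str]:
    """Analysis + checklist steps: hypothetical rules standing in for Gemini."""
    words = re.findall(r"[a-z]+", product["description"].lower())
    todos: List[str] = []
    if len(words) < 8:  # a very short pitch is probably unclear
        todos.append("improve landing page clarity")
    if not {"only", "first", "unlike"} & set(words):  # no differentiation language
        todos.append("highlight unique features")
    return todos or ["no obvious gaps found by these rules"]

page = (
    '<head><meta property="og:title" content="NoteBot">'
    '<meta property="og:description" content="AI notes for teams"></head>'
)
product = extract(page)
print(product["title"])    # NoteBot
print(checklist(product))  # ['improve landing page clarity', 'highlight unique features']
```

Because `extract` depends on specific tags, it breaks the way any scraper does: if the page stops exposing them, it quietly returns empty strings. Running it over a list of saved pages, on the other hand, is just a `for` loop.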

UX Notes

- Web Reader is the magic trick. I didn’t write a single line of scraping code.

- Outputs look like a polished dashboard—Opal pre-bakes clean cards, tags, and layout.

- It even prompts follow-up actions, e.g., “Want me to generate copy variations?”

Where It Breaks

- Fragile DOM: if Product Hunt changes markup, the flow fails. Not production-grade.

- No batch mode: handles one URL at a time. No apparent way to loop through 100 URLs.

- Black-box nodes: some blocks (e.g., Deep Research) are sealed—great for speed, but opaque for those who want to hack under the hood.

4. The Giants’ “Open Conspiracy”: GPTs vs. Copilot vs. Opal

Opal isn’t isolated. Compare it with OpenAI GPTs and Microsoft Copilot Studio:

- OpenAI GPTs: consumer-facing, with an app-store mindset. Purely prompt-based, betting that natural language is the final programming language.

- Microsoft Copilot Studio: business-facing, welded to Power Platform/Dynamics. Aimed at corporate analysts building internal copilots.

- Google Opal: Prosumer. More structured than GPTs (flows), lighter than Copilot Studio (no heavy stack).

The endgame isn’t “whose model is stronger.” It’s who builds the fastest distribution network for model capabilities. Opal is Gemini’s capillaries and nerve endings. It gets Gemini out of the chatbox and into workflows. That’s the real strategy: winning the agent-era equivalent of iOS/Android and the next app store.

5. How Indie Hackers Dance With LEGO

Is Opal good or bad for us? My take: it’s a gift. It isn’t here to replace you; it’s your unpaid, 24/7 technical co-founder.

My Checklist

- Treat it as an MVP validator, not production infra. Use Opal for 0→0.1. Once PMF looks promising, rebuild with heavy code.

- Obsess over workflow logic, not syntax. Your edge shifts from “I know Flask” to “I design the smartest competitor-analysis workflow.”

- Your value = defining the problem, not solving it. AI handles execution (“fetch data”). You handle intent (“why fetch? what next?”). We’re moving from full-stack devs to full-stack product architects.

- Mind platform lock-in, but leverage the early-stage perks. Sure, your Opal agent lives in Google’s world. But riding built-in Gemini, Web Reader, even Google Ads hooks for pennies? That’s smart parasitism.

6. Final Thought: Tools Change, Human Value Doesn’t

Schopenhauer saw humans as bundles of desire. Opal, GPTs, and Copilot simply lower the cost of satisfying that desire. They shift creation from expensive handcrafting (coding) to industrial assembly (drag-and-drop).

Great. That means we can finally spend 90% of our energy on “what to build” and “why,” not “how.” And that one question—what do you want to realize?—AI will never answer for you.

If this teardown sparked ideas, hit 👍 and share it with other builders. Follow my newsletter to explore AI, global growth, and digital strategy together.

🌌 We’re not just using tools—we’re becoming their orchestrators.