Word count: ~2800 words + 40-second video

Estimated reading time: ~10 minutes

Last updated: August 6, 2025

Is this article for you?

✅ You’re an AI content creator or video side-hustle explorer.

✅ You see viral AI videos and want to create similar styles but don’t know where to start.

✅ You’re eager for a ready-to-use, step-by-step “copy the homework” guide, not abstract theory.

✅ You’re interested in using AI for “creative industrialization” and “automated production.”

Chapter Contents

- Preface: From “Creative Black Box” to “Engineering Blueprint”
- Final Product: “Single-Generation” Replication Results
- My “Fully Automated” Pipeline: Seven Steps to Replicate a Viral Hit
- Why This Workflow Is a “Game Changer”
- Strategic Insight: The Underestimated “Native Multimodal” Giant
- Next Steps: From “Workflow” to “Automated Agent”
- Conclusion: Your Next Product Starts with Deconstructing a Video

1. Preface: From “Creative Black Box” to “Engineering Blueprint”

Hey, I’m Mr. Guo. The AI video space has been red-hot lately — we constantly scroll past uniquely styled, mind-blowing short clips. Though video content creation isn’t my main gig, I still have thoughts like: “This is amazing — I want to make one too!” But the next thought is: “How was this even made?”

So I tried using Gemini’s multimodal capabilities to imitate and replicate these videos. Below is a workflow I have personally validated. It leverages Gemini’s powerful multimodal understanding to turn “replicating viral videos” from an art form into a science anyone can learn. This isn’t just a tutorial; it’s an SOP for “creative industrialization.”

2. Final Product: “Single-Generation” Replication Results

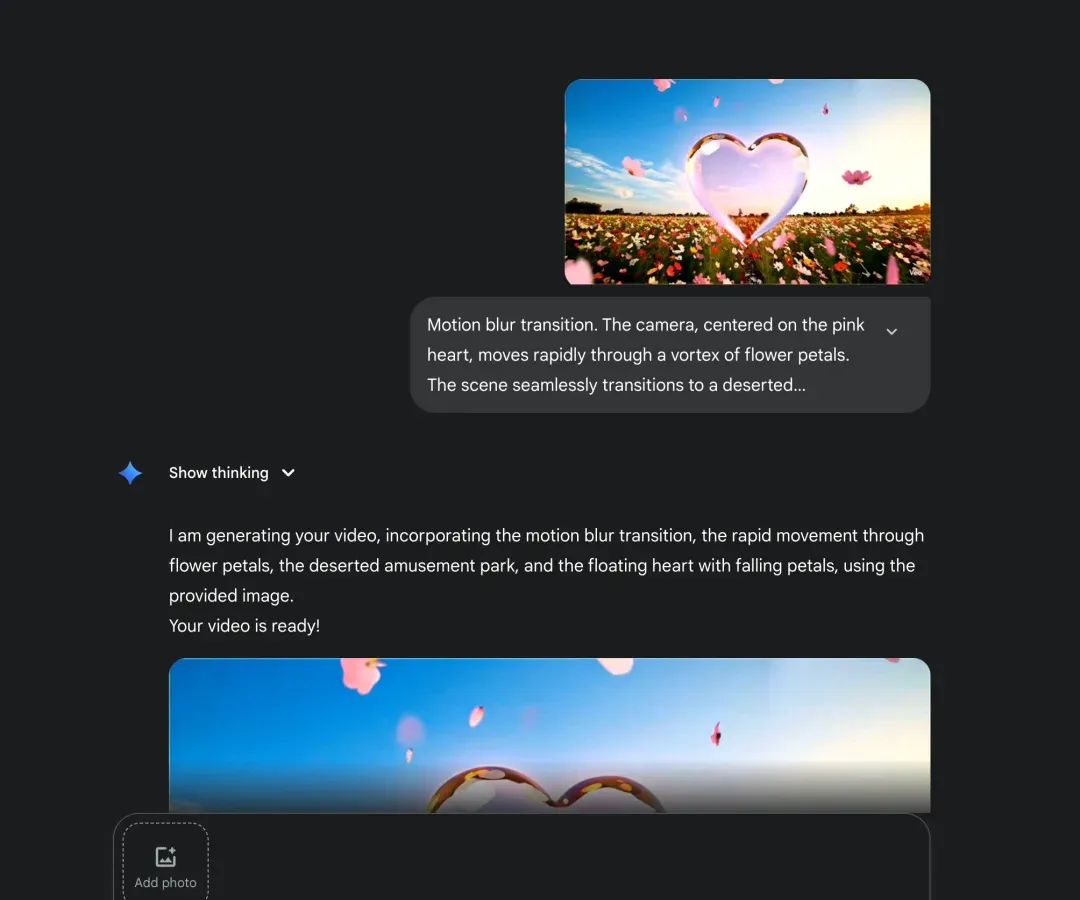

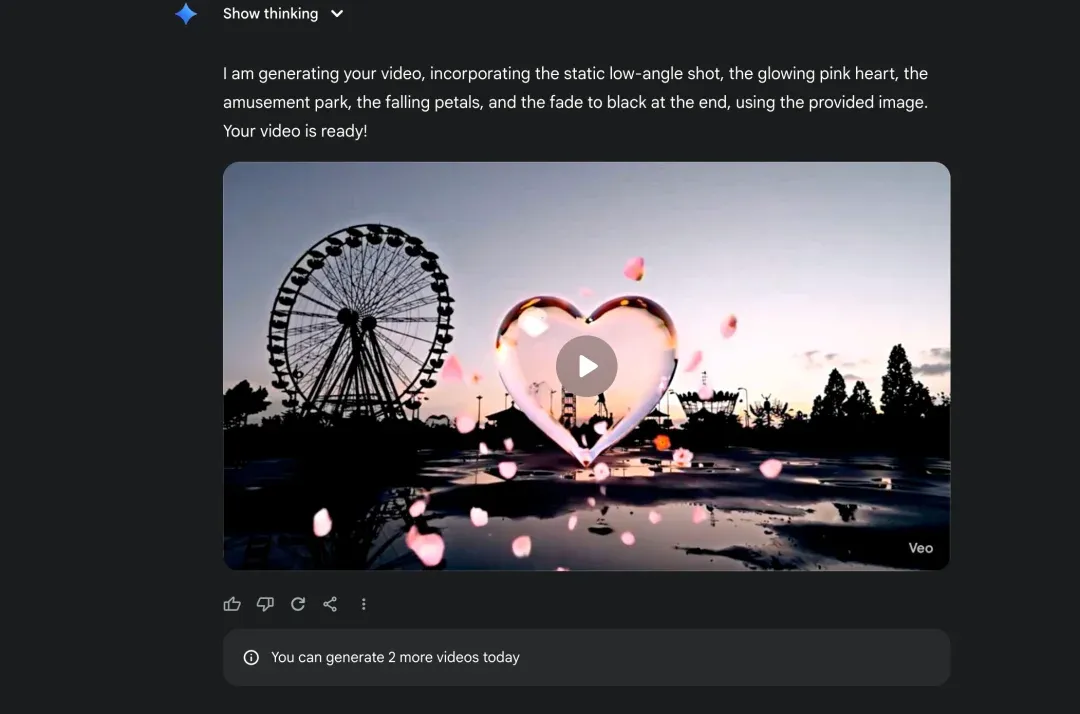

Before diving into the step-by-step details, let’s see what this “fully automated” pipeline actually produces.

The video below was created with this article’s workflow, using a viral AI video found online as the reference, and it was generated in “one shot.” Note: throughout the entire process, I made zero manual edits to the AI-generated prompts and didn’t do multiple “rolls” to pick the best outcome.

See that? This isn’t simple “imitation” but “re-creation” built on an understanding of the original’s visual language and narrative rhythm, and all of it comes from putting Gemini’s native multimodal capabilities to systematic, engineering-style use. Next, I’ll fully deconstruct how I did it.

3. My “Fully Automated” Pipeline: Seven Steps to Replicate a Viral Hit

Below, I’ll use a specific case to fully demonstrate this workflow. Our goal: input a viral video we want to imitate, output a stylistically similar but entirely new work.

Steps 1 & 2: Video Upload and Shot Breakdown

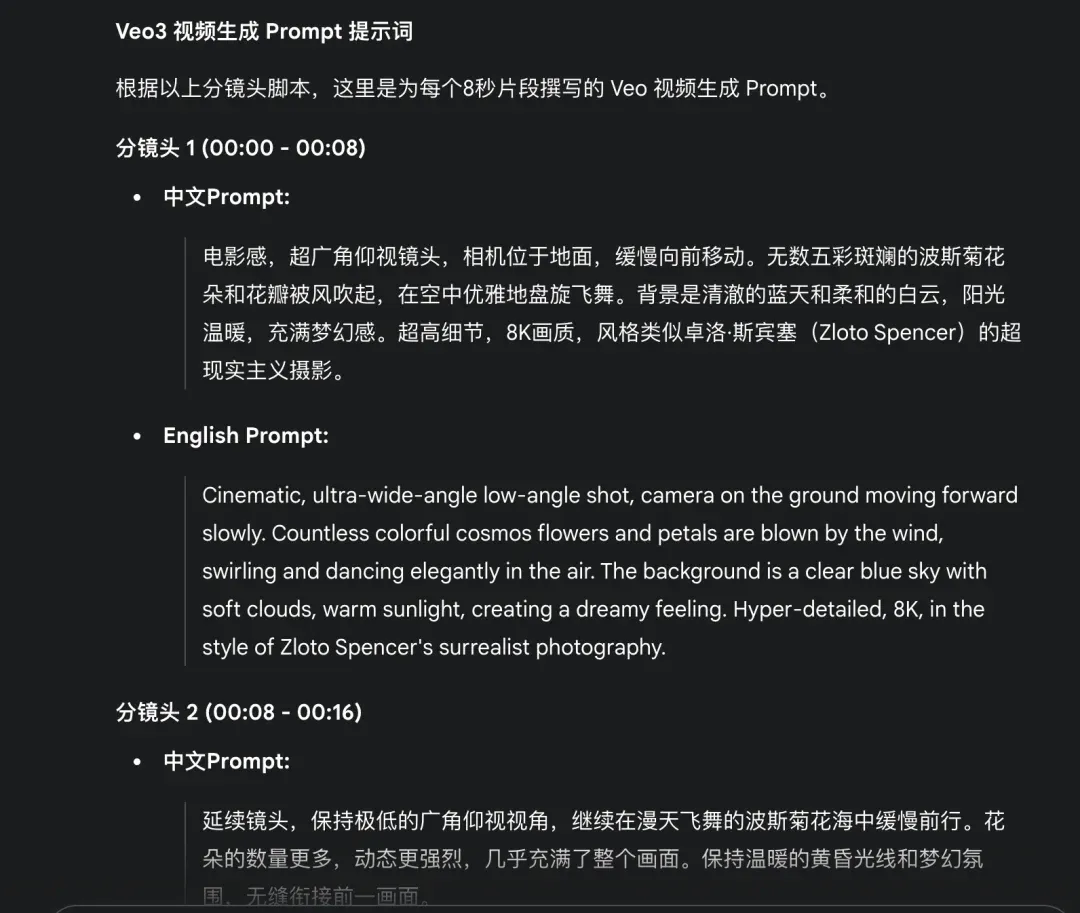

First, download your target video. Then send the video file directly to Gemini 2.5 Pro. Next comes the critical part, reverse engineering, with this instruction:

“Please break down this video by shots, using the 8-second principle, generating a script for each shot.”

Mr. Guo’s Note: The “8-second principle” is forward-looking design. Current mainstream text-to-video models (such as Veo 3, which generates 8-second clips) limit how long a single generation can be, so splitting shots by this principle up front lines the scripts up with what the video model can actually produce.
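The budgeting behind the 8-second principle is simple arithmetic. The sketch below is only an illustration (the actual shot breakdown is left to Gemini): assuming a hard 8-second cap per clip, it computes the time windows a reference video of a given length would need. The function name `shot_windows` is hypothetical.

```python
import math
from typing import List, Tuple


def shot_windows(duration_s: float, max_clip_s: float = 8.0) -> List[Tuple[float, float]]:
    """Split a video of `duration_s` seconds into back-to-back windows of at
    most `max_clip_s` seconds each (the "8-second principle")."""
    if duration_s <= 0 or max_clip_s <= 0:
        raise ValueError("durations must be positive")
    count = math.ceil(duration_s / max_clip_s)  # clips needed, rounding up
    return [
        (i * max_clip_s, min((i + 1) * max_clip_s, duration_s))
        for i in range(count)
    ]
```

For a 40-second reference this yields five 8-second windows, the same number of clips generated later in this workflow. Real shot boundaries rarely fall on exact multiples of 8 seconds, so treat the count as a lower bound on how many generations to budget.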

Step 3: Writing Generative Prompts

Once we have the shot scripts, the next step is to have Gemini turn these descriptive scripts into visually evocative prompts that a text-to-video model can execute. The instruction:

“Great, now based on the above shot scripts, please write text-to-video prompts for each segment.”
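Steps 1 to 3 were done in the Gemini web app. For readers who would rather script them, here is a minimal sketch against the Gemini API, assuming the `google-genai` Python SDK (`files.upload`, `files.get`, `chats.create`, `send_message`). The function name, polling interval, and model string are assumptions. Both instructions go into one chat session, because the second one refers to “the above shot scripts.”

```python
import time
from typing import Any, Tuple

# The two instructions quoted in Steps 2 and 3 of this article.
SHOT_BREAKDOWN_PROMPT = (
    "Please break down this video by shots, using the 8-second principle, "
    "generating a script for each shot."
)
VIDEO_PROMPT_REQUEST = (
    "Great, now based on the above shot scripts, please write "
    "text-to-video prompts for each segment."
)


def breakdown_and_prompts(
    client: Any,
    video_path: str,
    model: str = "gemini-2.5-pro",
    poll_seconds: float = 5.0,
) -> Tuple[str, str]:
    """Steps 1-3 as one multi-turn chat. `client` is assumed to be a
    google-genai `genai.Client` (or an object exposing the same methods)."""
    # Step 1: upload the reference video through the Files API.
    video = client.files.upload(file=video_path)
    # Uploaded videos are processed asynchronously; poll until processing ends.
    while video.state is not None and video.state.name == "PROCESSING":
        time.sleep(poll_seconds)
        video = client.files.get(name=video.name)
    if video.state is not None and video.state.name == "FAILED":
        raise RuntimeError(f"video processing failed: {video.name}")

    # One chat session, so the second turn can refer to "the above shot scripts".
    chat = client.chats.create(model=model)
    # Step 2: shot-by-shot scripts under the 8-second principle.
    scripts = chat.send_message([video, SHOT_BREAKDOWN_PROMPT]).text
    # Step 3: turn those scripts into text-to-video prompts.
    prompts = chat.send_message(VIDEO_PROMPT_REQUEST).text
    return scripts, prompts
```

With the SDK installed and `GEMINI_API_KEY` set, a call could look like `breakdown_and_prompts(genai.Client(), "reference.mp4")` after `from google import genai`. The client is passed in rather than created inside the function, which also makes the flow easy to try out with a stand-in object.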

Steps 4 & 5: Chain Generation and Visual Continuity

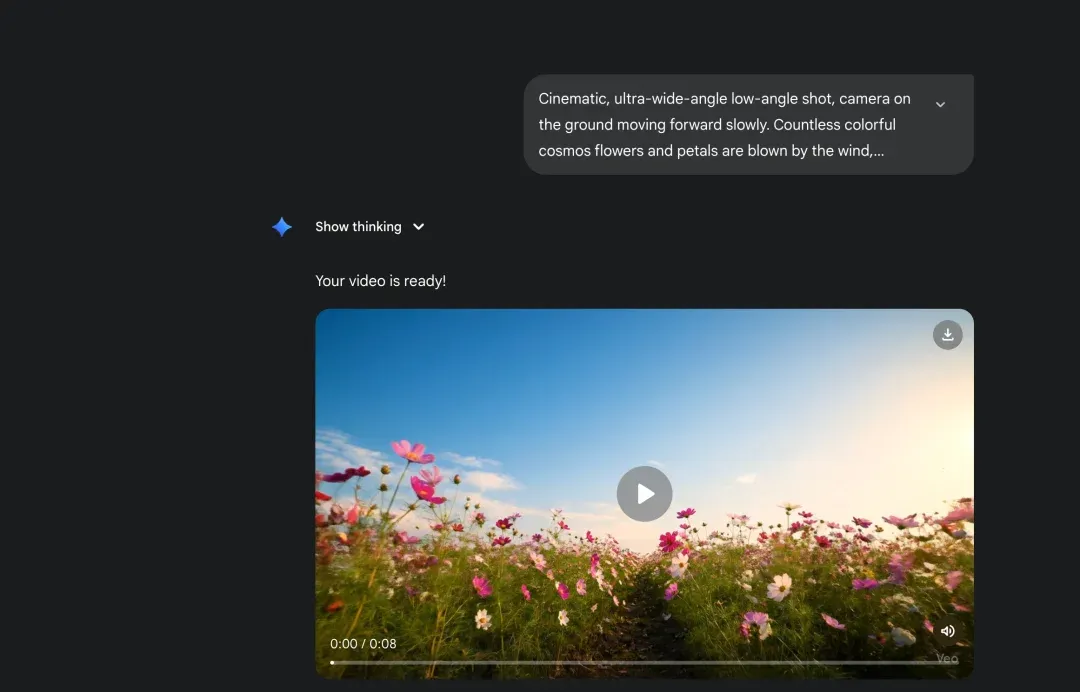

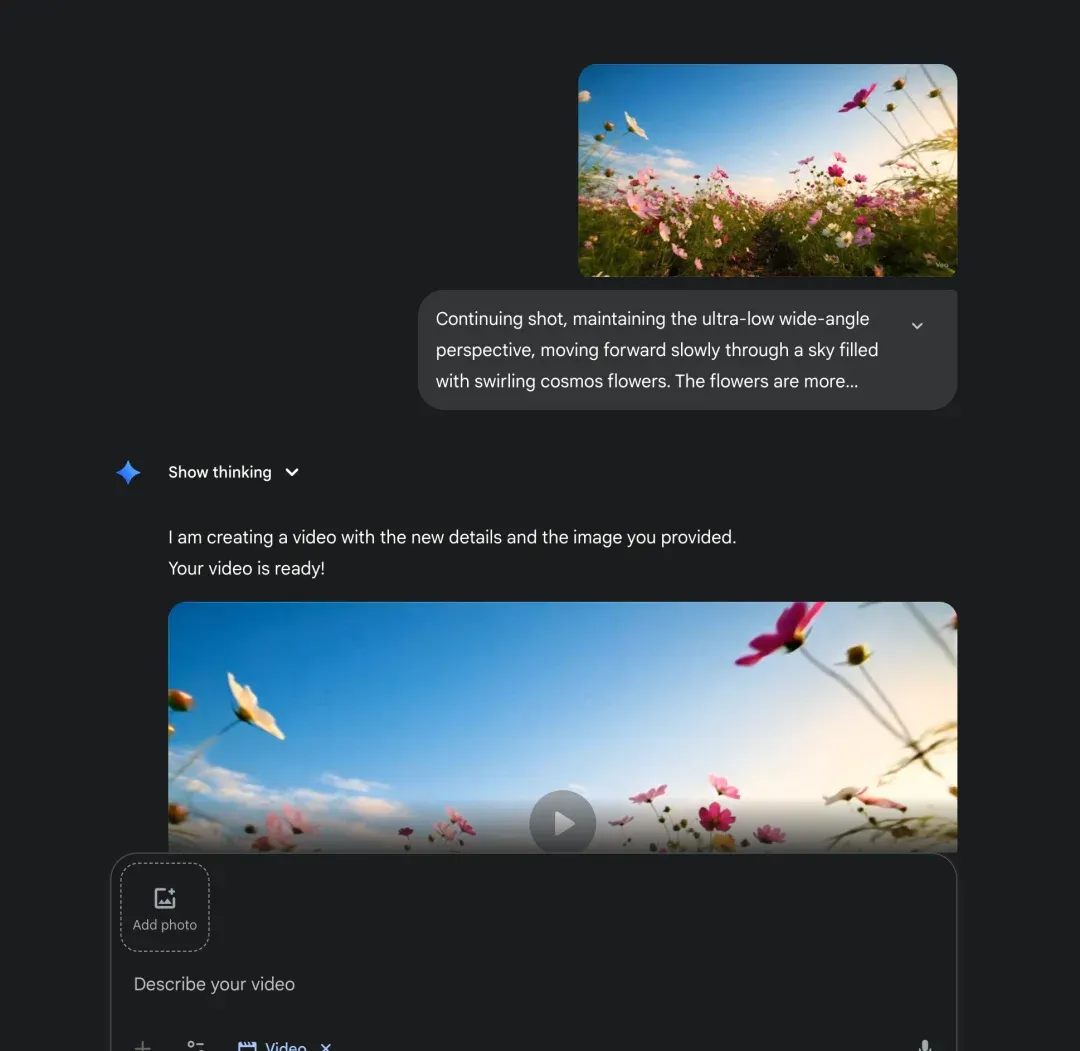

This is the core technique for ensuring video coherence. We don’t generate all segments at once but use “chain generation”:

- Use the first prompt to generate the first video segment.
- Screenshot the last frame of the first video as the first-frame reference for the second video.
- Input the second prompt with this reference image attached, generating the second video segment.
- Repeat until all segments are generated.

Mr. Guo’s Note: In this experiment I actually skipped the first-frame images, because I wanted to put Gemini’s creativity to a full test. The results were still good. For maximum continuity, though, “tail becomes head” is best practice.
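The chain-generation loop itself is short. In this sketch, `generate_clip(prompt, first_frame)` and `extract_last_frame(clip)` are hypothetical callables you would supply yourself (for example, a wrapper around whatever video-generation access you have, and a subprocess call running the ffmpeg command built below); neither is a Gemini or Veo API. The ffmpeg options used (`-sseof`, `-update`) are standard, but check them against your ffmpeg version.

```python
from typing import Callable, List, Optional


def ffmpeg_last_frame_cmd(clip_path: str, image_path: str) -> List[str]:
    """Build an ffmpeg command that saves the final frame of `clip_path`.

    -sseof -1 starts reading one second before the end of the input, and
    -update 1 keeps overwriting a single image file, so the frame left on
    disk is the last one decoded."""
    return ["ffmpeg", "-y", "-sseof", "-1", "-i", clip_path,
            "-update", "1", image_path]


def chain_generate(
    prompts: List[str],
    generate_clip: Callable[[str, Optional[str]], str],
    extract_last_frame: Callable[[str], str],
) -> List[str]:
    """'Tail becomes head': each clip after the first is generated with the
    previous clip's last frame as its first-frame reference."""
    clips: List[str] = []
    reference: Optional[str] = None  # the first clip has no reference image
    for i, prompt in enumerate(prompts):
        clip = generate_clip(prompt, reference)
        clips.append(clip)
        if i < len(prompts) - 1:  # no frame needed after the final clip
            reference = extract_last_frame(clip)
    return clips
```

Inside your `extract_last_frame` you might run `subprocess.run(ffmpeg_last_frame_cmd(clip, clip + ".jpg"), check=True)` and return the image path. To mirror the experiment described in the note above, which skipped reference frames, `generate_clip` can simply ignore its second argument.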

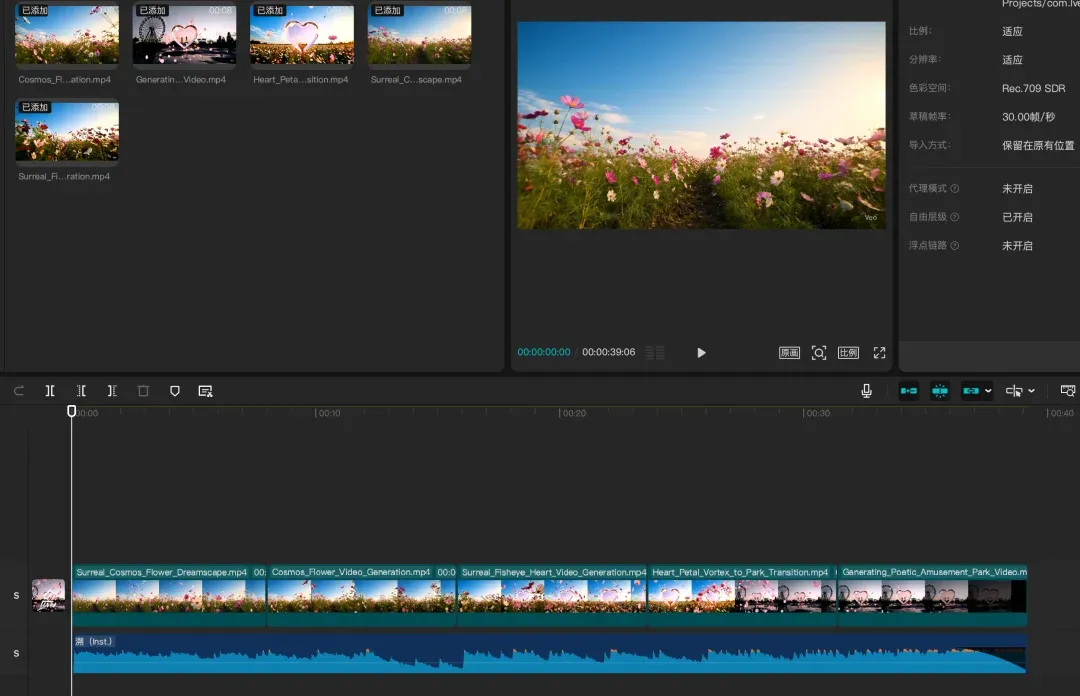

PS: I was clever here. The Gemini Pro plan lets you generate 3 videos per day with Veo 3, and my quota refreshes at 11:24 AM. I started this workflow at 11:20 and used the 3 generations still left from the previous day; the quota then refreshed just in time for me to finish all five videos in one go!

Steps 6 & 7: Assembly and Final Product

Final step: import all generated segments into CapCut or any editing tool you’re familiar with for simple splicing, music, and color grading, and a brand-new work is born.
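If you would rather script the splicing part (music and color grading still belong in an editor such as CapCut), ffmpeg’s concat demuxer can join the clips. This is a minimal sketch, assuming all clips come from the same model with identical codec, resolution, and frame rate, which is what lets `-c copy` join them without re-encoding. The file names are placeholders.

```python
from typing import List


def concat_list(clips: List[str]) -> str:
    """Contents of an ffmpeg concat-demuxer list file, one clip per line."""
    lines = []
    for clip in clips:
        # Inside single quotes, a literal ' is written as '\'' (close, escape, reopen).
        escaped = clip.replace("'", "'\\''")
        lines.append(f"file '{escaped}'")
    return "\n".join(lines) + "\n"


def concat_cmd(list_path: str, output_path: str) -> List[str]:
    """Join the listed clips without re-encoding. -safe 0 permits paths the
    demuxer would otherwise reject as unsafe (e.g. absolute paths)."""
    return ["ffmpeg", "-y", "-f", "concat", "-safe", "0", "-i", list_path,
            "-c", "copy", output_path]
```

Write the output of `concat_list` to a file such as `list.txt` next to the clips (relative paths in it are resolved against the list file’s location), then run the `concat_cmd` command, for example with `subprocess.run(..., check=True)`.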

4. Why This Workflow Is a “Game Changer”

What makes this workflow disruptive is its degree of automation and its engineering mindset. Throughout my entire experiment:

- Near-zero manual intervention: I made no modifications to Gemini-generated prompts.
- No multi-roll selection: all video segments were single generations; what you see is what you get.

This means that if we packaged this process in a workflow orchestration tool such as Dify, the only manual work would, in theory, be entering a reference video link and then receiving a finished video. This isn’t just a tool-level efficiency gain; it’s a complete transformation of how production works.

5. Strategic Insight: The Underestimated “Native Multimodal” Giant

“Video replication” is just an entry point. What it reveals is an extremely powerful yet often overlooked core capability of Gemini: native multimodality. I believe this is Gemini’s true “moat” and what fundamentally differentiates it from other models.

Many models handle multimodal tasks more like “stitching” — a text model with a vision module bolted on. Gemini was built for multimodality from the start. This means it’s not “seeing images then talking about them” but truly “understanding” the intricate relationships between image, audio, and text within a unified cognitive framework. It understands emotional transitions, camera language, and narrative rhythm in videos — not just identifying objects in frames.

1. Ecosystem as Moat: Direct YouTube Connection

Gemini’s direct connection to the YouTube ecosystem isn’t just about skipping the installation of a “YouTube-to-text” tool. It’s like having an API into humanity’s largest video knowledge base. Any public video, whether a tech talk, a product review, or a market-trend analysis, instantly turns from an “information island” into a “data source” you can invoke, analyze, and re-create at any time.
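Through the API, the same YouTube connection amounts to passing the video URL as file data instead of uploading a file. This is a minimal sketch, assuming the `google-genai` SDK accepts the dict form of `contents` (a `file_data` part carrying a `file_uri`, followed by a text part). The helper name and the host allow-list are assumptions added for illustration, not part of the API.

```python
from typing import Any, Dict, List
from urllib.parse import urlparse

# Hosts accepted by this helper; an illustrative allow-list, not an API rule.
YOUTUBE_HOSTS = {"youtube.com", "www.youtube.com", "m.youtube.com", "youtu.be"}


def youtube_contents(url: str, instruction: str) -> List[Dict[str, Any]]:
    """Build a `contents` payload that points Gemini at a public YouTube video
    by URL (nothing is downloaded or uploaded), followed by a text instruction."""
    host = (urlparse(url).hostname or "").lower()
    if host not in YOUTUBE_HOSTS:
        raise ValueError(f"not a YouTube URL: {url}")
    return [{
        "role": "user",
        "parts": [
            {"file_data": {"file_uri": url}},
            {"text": instruction},
        ],
    }]
```

A call might then look like `client.models.generate_content(model="gemini-2.5-pro", contents=youtube_contents(url, "Extract the core insights with timestamps."))`.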

2. Mr. Guo’s Practice: A Step-Change in Information Processing

When writing my analysis of Claude’s official August 1st technical talk, I experienced this step-change firsthand. I fed the 30+ minute video directly to Gemini, and it precisely extracted the core insights along with their timestamps. For this test I used AI Studio, and the run consumed about 450,000 tokens. Google is generous here: the free quota handles two such videos a day. Pure text models simply cannot process massive, unstructured video information this efficiently.
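The numbers above also give a rough way to budget other videos. Dividing the observed 450,000 tokens by 30 minutes gives about 250 tokens per second of video; since the talk ran somewhat longer than 30 minutes, the true rate was a bit lower. This is a back-of-envelope sketch based on that single run, not Google’s published rate, which also depends on settings such as media resolution. The function names are hypothetical.

```python
def implied_tokens_per_second(observed_tokens: int, minutes: float) -> float:
    """Tokens per second of video implied by one observed run."""
    return observed_tokens / (minutes * 60)


def estimate_tokens(minutes: float, tokens_per_second: float) -> int:
    """Rough token cost of a video of the given length at a given rate."""
    return round(minutes * 60 * tokens_per_second)


# The run described above: ~450,000 tokens for a 30+ minute talk.
rate = implied_tokens_per_second(450_000, 30)  # 250.0
# Budget for a hypothetical 10-minute product review at the same rate.
review_tokens = estimate_tokens(10, rate)  # 150000
```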

6. Next Steps: From “Workflow” to “Automated Agent”

The current “semi-automated” workflow is already powerful, but as a Vibe Coder I’m always thinking about how to make it “lazier” and smarter. My next plan is to use Gemini CLI instead of the Gemini web app, leveraging its Agent tool-calling and MCP (Model Context Protocol) support to build a true “Video-to-X” automated agent. Imagine entering a single terminal command that automatically turns a video link into a polished PPT presentation. That’s the ultimate vision of the AI Agent era.
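Gemini CLI picks up MCP servers from an `mcpServers` entry in its `settings.json`, and a project-level copy can live in `.gemini/settings.json`. As a first step toward such an agent, here is a sketch that registers a stdio MCP server there while keeping any existing settings. The server name `video-tools` and the script `video_mcp_server.py` are hypothetical placeholders for a server you would still have to write, and the key names should be checked against the current Gemini CLI docs.

```python
import json
from pathlib import Path
from typing import Any, Dict, List


def register_mcp_server(project_dir: str, name: str, command: str,
                        args: List[str]) -> Dict[str, Any]:
    """Add a stdio MCP server under "mcpServers" in the project's
    .gemini/settings.json, keeping whatever settings are already there."""
    path = Path(project_dir) / ".gemini" / "settings.json"
    settings: Dict[str, Any] = (
        json.loads(path.read_text(encoding="utf-8")) if path.exists() else {}
    )
    # Gemini CLI launches the server with this command and these arguments.
    settings.setdefault("mcpServers", {})[name] = {"command": command, "args": args}
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text(json.dumps(settings, indent=2) + "\n", encoding="utf-8")
    return settings
```

For example, `register_mcp_server(".", "video-tools", "python", ["video_mcp_server.py"])`; the server should then appear in Gemini CLI’s `/mcp` listing once the script exists.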

7. Conclusion: Your Next Product Starts with Deconstructing a Video

In summary, this workflow’s core philosophy is “deconstruction and reconstruction.” Gemini’s powerful multimodal capabilities give us unprecedented “deconstruction” ability — breaking any video work into analyzable elements. Powerful text-to-video models then give us efficient “reconstruction” tools.

This is extremely useful for viral video analysis in cross-border e-commerce. To my knowledge, many SaaS products serving sellers in video ad analysis and influencer content analysis use Gemini’s API.

Your next viral product might come from deeply deconstructing a video.

Found Mr. Guo’s analysis insightful? Drop a 👍 and share with more friends who need it!

Follow my channel to explore AI, going global, and digital marketing’s infinite possibilities together.

🌌 When creativity can be industrialized, imagination becomes the only boundary.